Logging is an important but often overlooked part of an organization’s security posture. Logging without organization, searchability, or reporting leads to data being missed. This post is part 2 of a longer series that VDA Labs is writing on Graylog, covering a variety of topics, including the following items:

- Installation, securing, and optimizing the setup part 1
- Installation, securing, and optimizing the setup part 2
- Domain Controller/DHCP log collection and alerts
- File/print server log collection and alerts
- Exchange server log collection
- IIS log collection
- Firewall log collection
- Setting up Threat Intelligence
- SQL Server

This week focuses on securing the Graylog web interface and some basic optimization for Graylog.

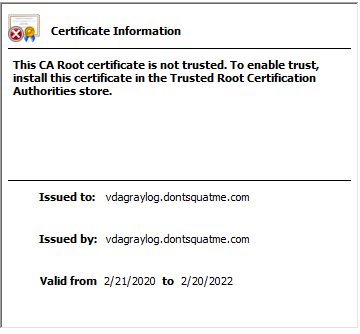

In our last blog, VDA covered setting up and securing MongoDB and Elasticsearch for use with Graylog. We also installed Graylog and connected to our MongoDB and Elasticsearch instances securely using TLS. Now, we need to focus on securing the web and API interfaces of our Graylog instance. To do this, we first need to generate our new Graylog certificate and import the corresponding key.

To start, we will need to generate a new certificate request. This can be done by creating a file named openssl-graylog.cnf. Inside of this file, we want the following items:

    [req]
    distinguished_name = req_distinguished_name
    x509_extensions = v3_req
    prompt = no

    # Details about the issuer of the certificate
    [req_distinguished_name]
    C = US
    ST = Some-State
    L = Some-City
    O = My Company
    OU = My Division
    CN = graylog.example.com

    [v3_req]
    keyUsage = keyEncipherment, dataEncipherment
    extendedKeyUsage = serverAuth
    subjectAltName = @alt_names

    # IP addresses and DNS names the certificate should include
    # Use IP.### for IP addresses and DNS.### for DNS names,
    # with "###" being a consecutive number.
    [alt_names]
    IP.1 = 203.0.113.42
    DNS.1 = graylog.example.com

With our request created, we can start the process of generating our new certificates and importing our files into the keystore.

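As a rough sketch of how the certificate generation step might look, the function below creates a self-signed certificate and key from the request file and converts the key to PKCS#8, the format Graylog expects for its private key. The function name `generate_graylog_cert`, the output file names, the 365-day validity, and the 2048-bit RSA key are illustrative assumptions, not values prescribed by this series. Importing the result into the keystore is not covered here.

```shell
#!/bin/sh
# Sketch only: the function name, output file names, validity period,
# and key size below are assumptions chosen for illustration.
generate_graylog_cert() {
    config="$1"   # path to the request file, e.g. openssl-graylog.cnf
    outdir="$2"   # directory that receives the generated files

    # Create a self-signed X.509 certificate and an unencrypted RSA key,
    # taking the subject and extensions from the request file.
    openssl req -x509 -days 365 -nodes -newkey rsa:2048 \
        -config "$config" \
        -keyout "$outdir/graylog-key.pem" \
        -out "$outdir/graylog-cert.pem" || return 1

    # Write an unencrypted PKCS#8 copy of the private key.
    openssl pkcs8 -topk8 -nocrypt \
        -in "$outdir/graylog-key.pem" \
        -out "$outdir/graylog-key-pkcs8.pem" || return 1

    # Print the certificate so the subject and alternative names can be checked.
    openssl x509 -in "$outdir/graylog-cert.pem" -noout -text
}
```

Calling `generate_graylog_cert openssl-graylog.cnf .` from the directory that holds the request file would write the three PEM files alongside it. The printed certificate should list `graylog.example.com` and `203.0.113.42` under the Subject Alternative Name extension.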